Start with Databricks

Integration of Databricks with Entropy Data.

Best Practices: Data Products in Databricks

A data product is a logical container of managed data that you want to share with other teams. The data product can have multiple output ports representing the specific datasets with data model, version, environment, and technology.

Databricks Unity Catalog organizes data in three layers: catalog, schema, and table. When working with data products, we need a convention that defines which data is internal and which tables are part of the output ports.

A typical convention could look like this:

- Catalog: <domain_name>_<team_name>_<environment>
  - Schema: dp_<data_product_name>_op_<output_port_name>_v<output_port_version>
    - Tables and views that are part of the output port
  - Schema: dp_<data_product_name>_internal
    - Internal source, intermediate, and staging tables that are necessary to create the output port tables

Adapt this to your needs and make sure to document it as a Global Policy.

A Unity Catalog is often created for a specific organization, business unit, domain, or team. Some organizations also have one catalog per data product.

A schema should be created for each output port with a clear naming convention. The output_port_name can be omitted if the output port name is equal to the data product name.
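The naming convention above can be captured in a few helper functions. This is only a minimal sketch: the function names are hypothetical, and the sample values assume that the names used in this tutorial's example (demo_org, masterdata, prod, country_codes) map onto the placeholders as shown.

```python
from typing import Optional


def catalog_name(domain: str, team: str, environment: str) -> str:
    """Build <domain_name>_<team_name>_<environment>."""
    return f"{domain}_{team}_{environment}"


def output_port_schema(data_product: str, version: int,
                       output_port: Optional[str] = None) -> str:
    """Build the schema name for an output port.

    The output port name is omitted when it is not given or when it
    equals the data product name.
    """
    if output_port is None or output_port == data_product:
        return f"dp_{data_product}_op_v{version}"
    return f"dp_{data_product}_op_{output_port}_v{version}"


def internal_schema(data_product: str) -> str:
    """Build the schema name for internal tables of a data product."""
    return f"dp_{data_product}_internal"


print(catalog_name("demo_org", "masterdata", "prod"))
print(output_port_schema("country_codes", 1))
print(internal_schema("country_codes"))
```

Keeping the convention in one place like this makes it easier to apply it consistently in pipelines and to check it in reviews.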

Follow your organization's naming conventions for table names.

Example

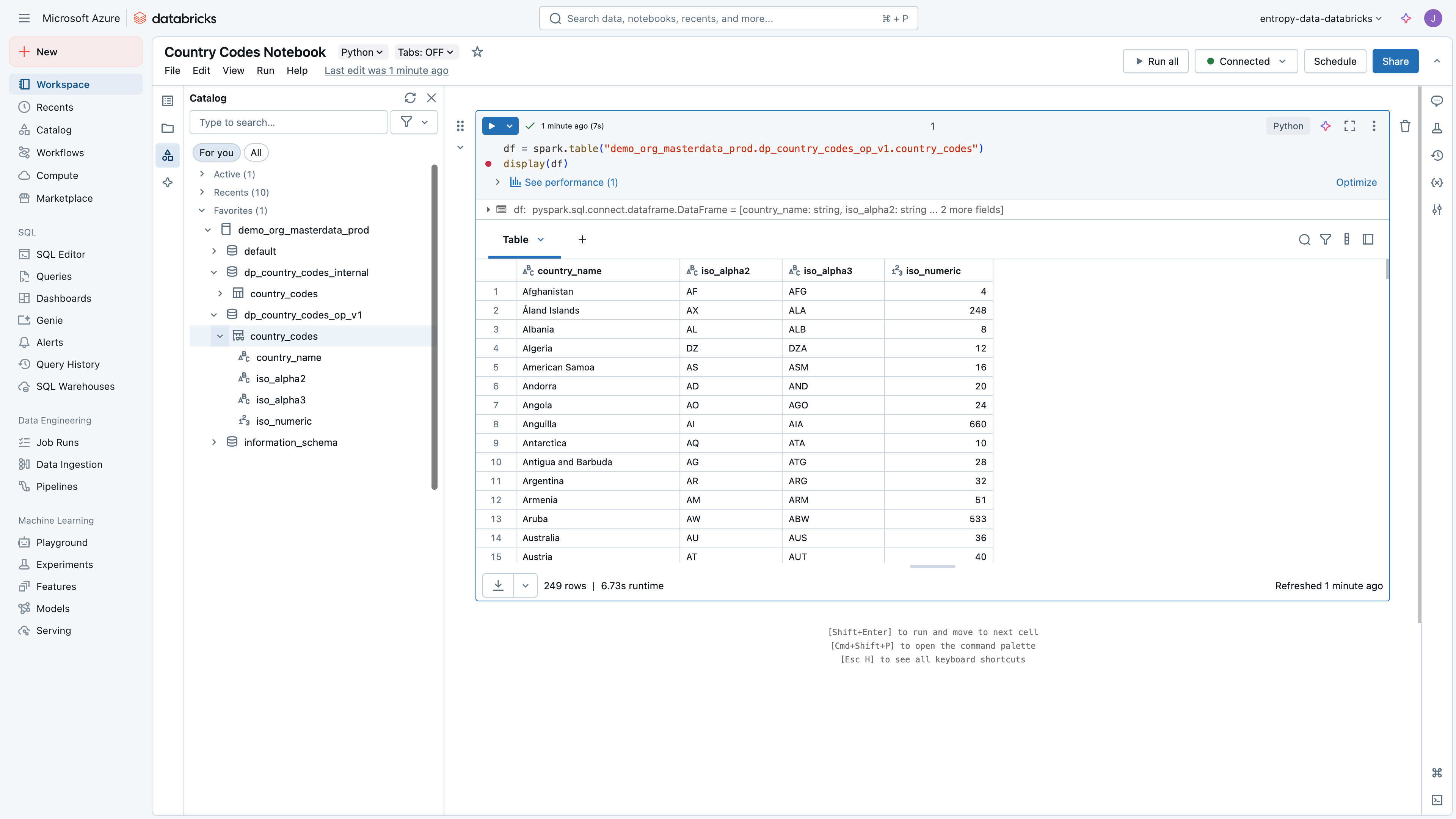

Let's imagine we have a table country_codes in Databricks Unity Catalog, and we want to create a data product for it.

In our example, we would have this structure:

- Catalog: demo_org_masterdata_prod
  - Schema: dp_country_codes_op_v1
    - Table: country_codes
  - Schema: dp_country_codes_internal
    - Table: country_codes

Conventions may differ, but the core idea is to have one schema per output port. A schema per output port lets you share one or multiple tables while keeping the permission model simple and the internal tables private.
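To illustrate why one schema per output port keeps permissions simple, the sketch below generates the Unity Catalog GRANT statements a consumer would need for a single output port schema. The function name and the group name data-consumers are assumptions made for illustration; the statements are only built as strings here and would have to be executed in Databricks by someone with the right to grant these privileges.

```python
def consumer_grants(catalog: str, schema: str, principal: str) -> list[str]:
    """Return the GRANT statements for read access to one output port schema.

    The internal schema is never mentioned, so internal tables stay private.
    """
    return [
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` TO `{principal}`",
        f"GRANT SELECT ON SCHEMA `{catalog}`.`{schema}` TO `{principal}`",
    ]


# "data-consumers" is a hypothetical group name.
for statement in consumer_grants(
    "demo_org_masterdata_prod", "dp_country_codes_op_v1", "data-consumers"
):
    print(statement)
```

Because the privileges are granted at schema level, tables added to the output port later are covered without additional grants.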

Add Data Product to Entropy Data

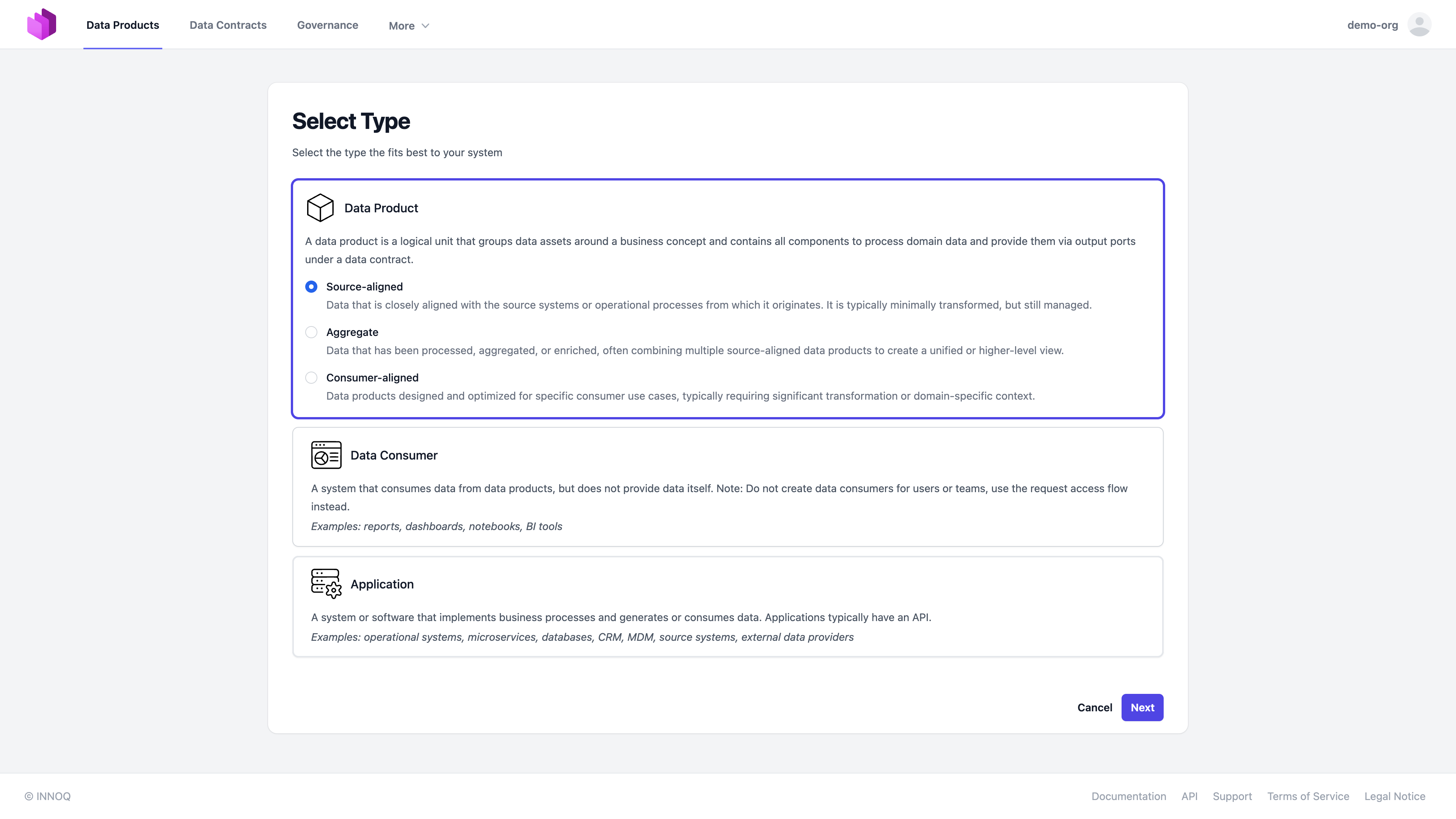

Now, let's register the data product in Entropy Data. We start with the Web UI and will later look at how this process can be automated with the API.

Log in to Entropy Data, go to the Data Products page, and select "Add Data Product" > "Add in Web UI".

Our Data Product is source-aligned, as we don't have business-specific transformations, so we select "Source-aligned" as the type.

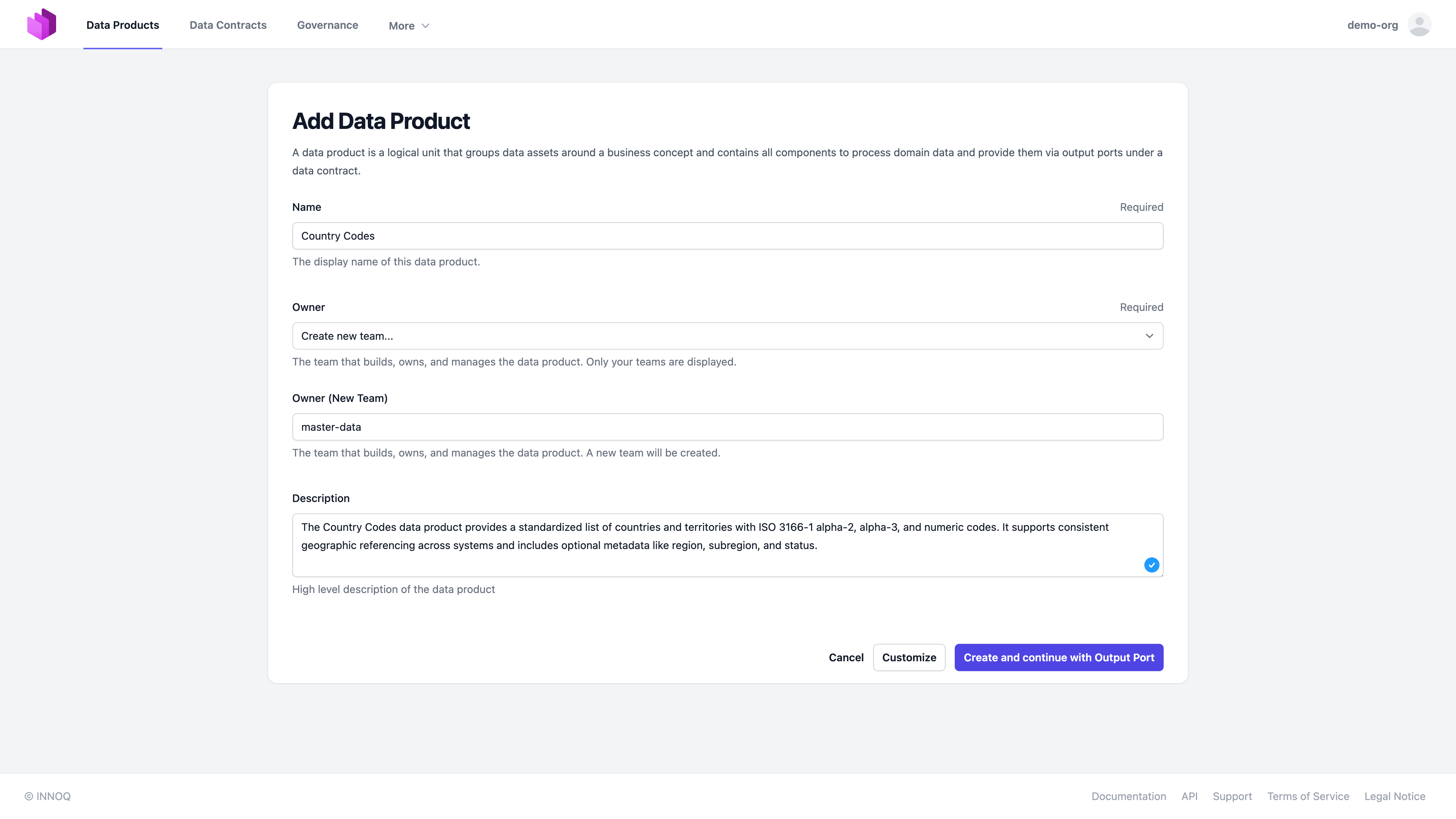

In the next step, we need to select the data product name and a team that owns the data product. We can create a new team or select an existing one.

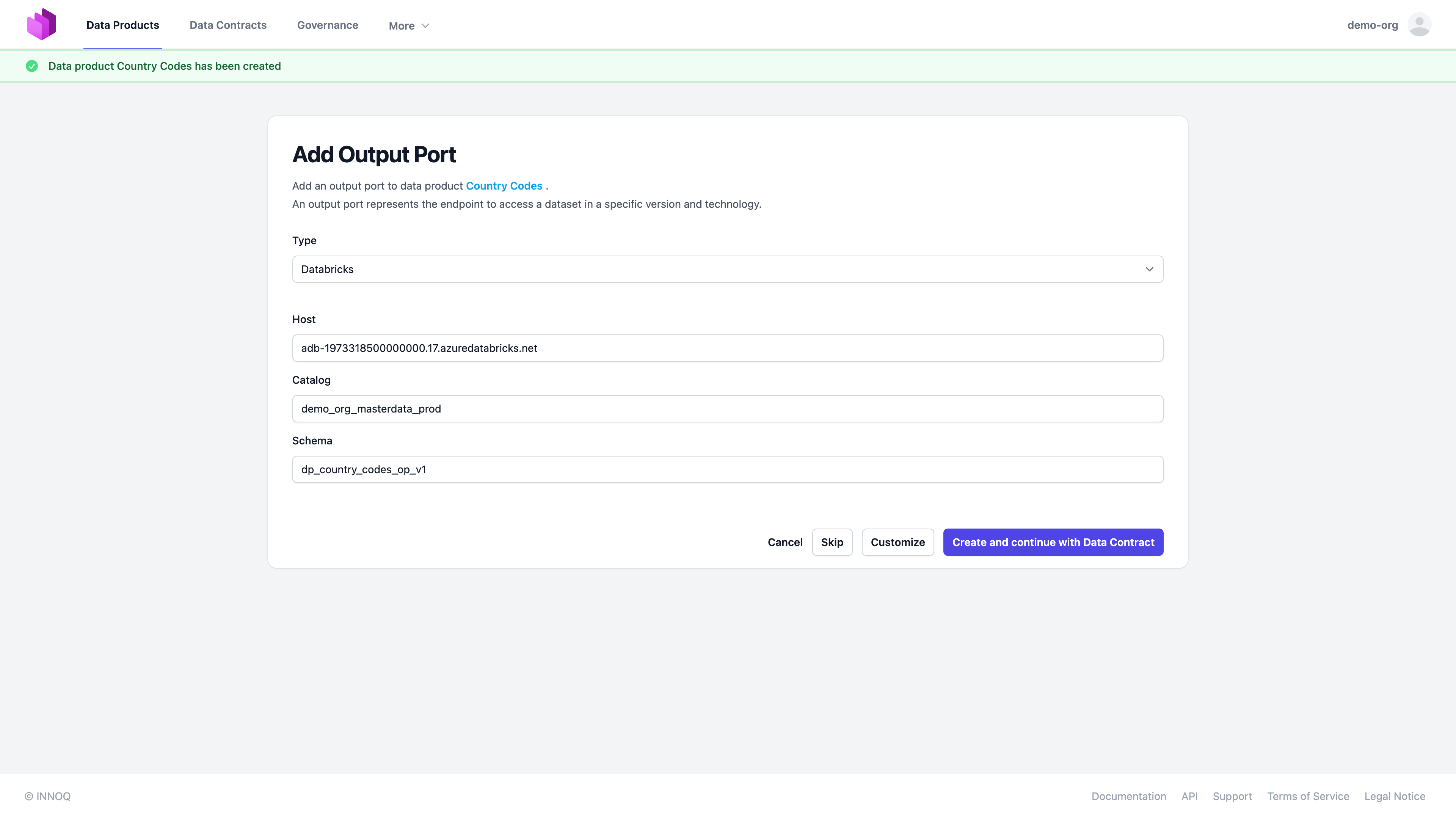

Then we select the output port type and point to our Databricks catalog and schema.

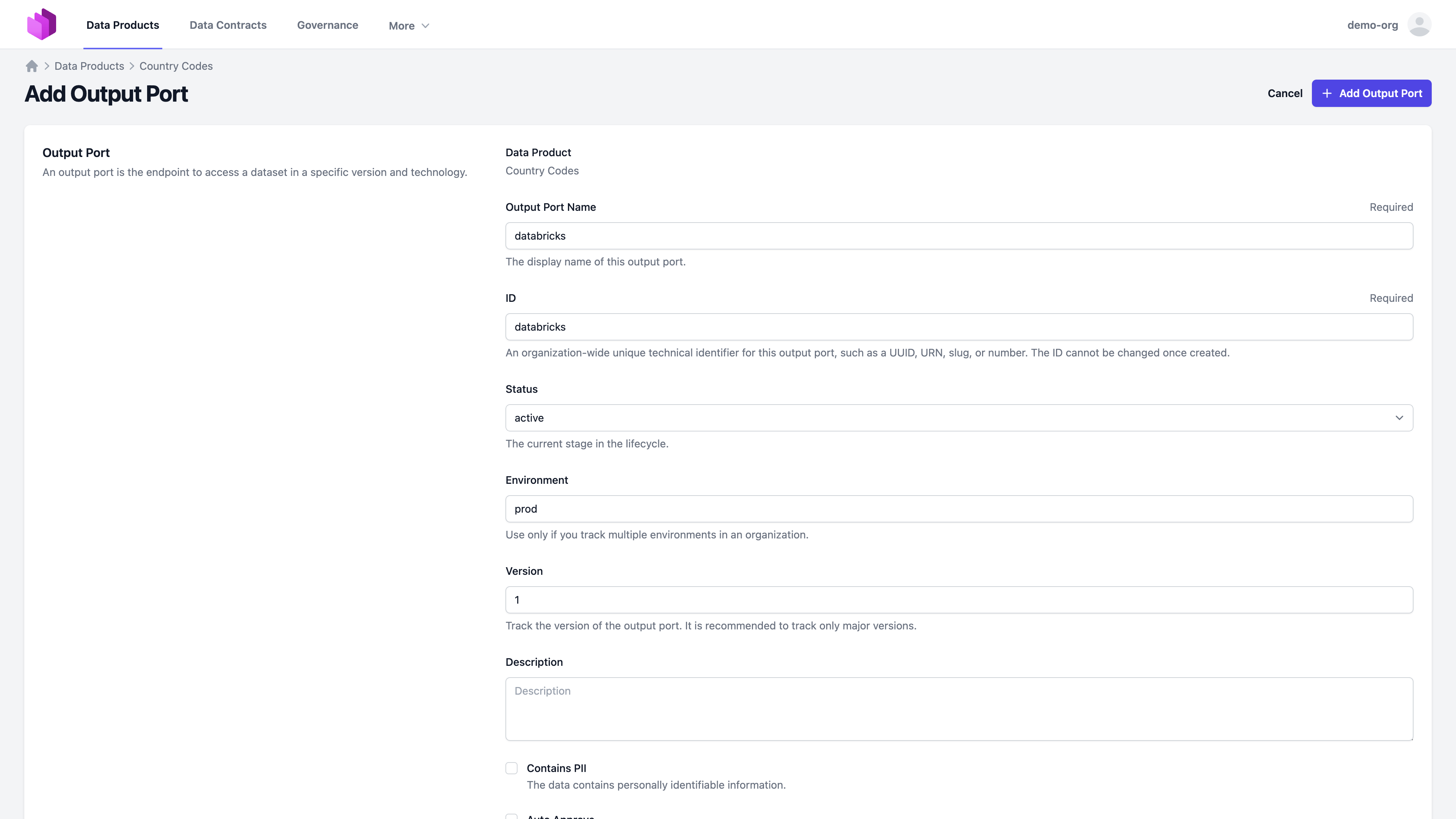

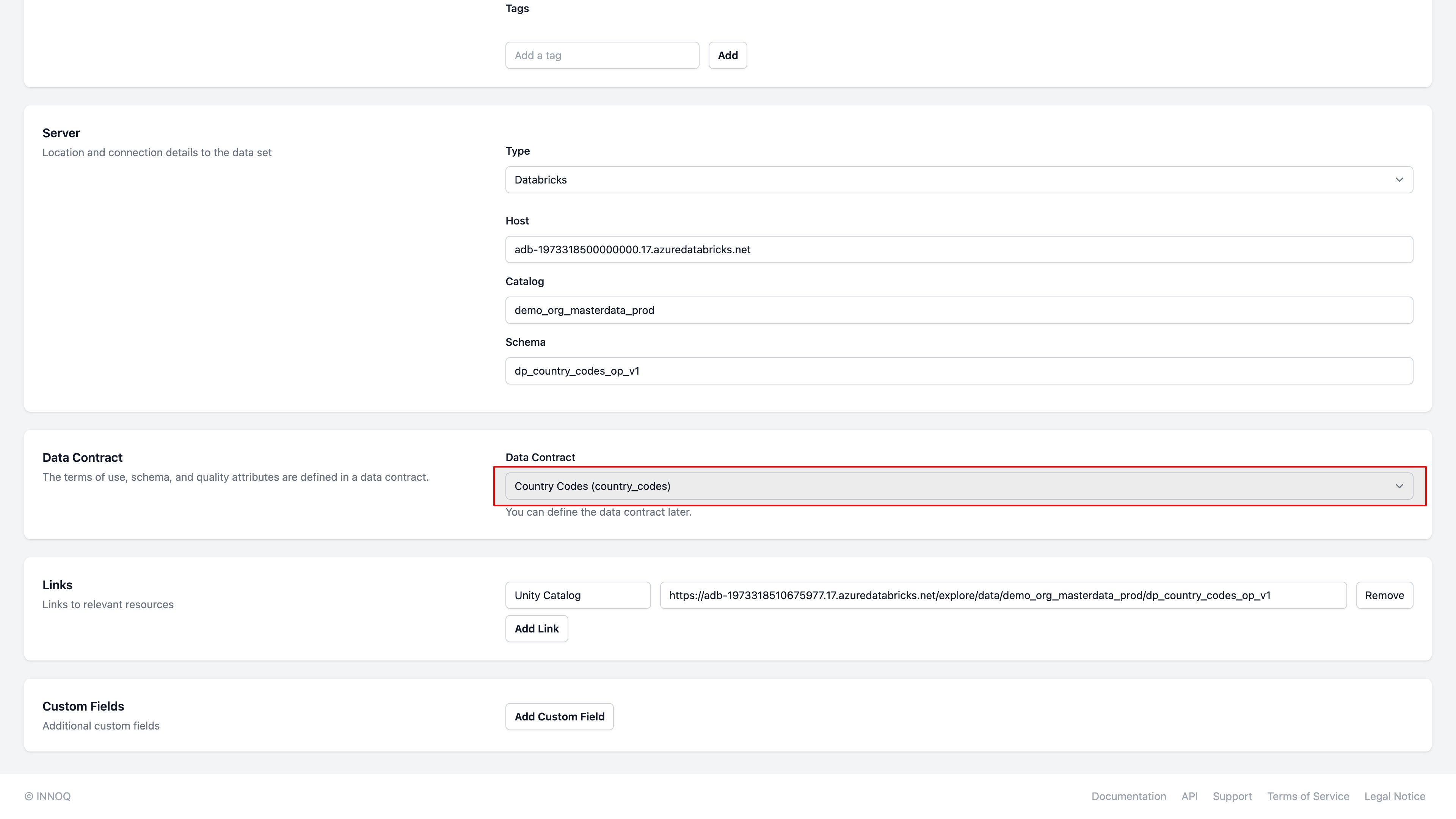

Select "Customize", and configure the output port status, environment, and version. Also, it is good practice to add a link to the Databricks Unity Catalog Web UI for the schema to have a quick navigation option.

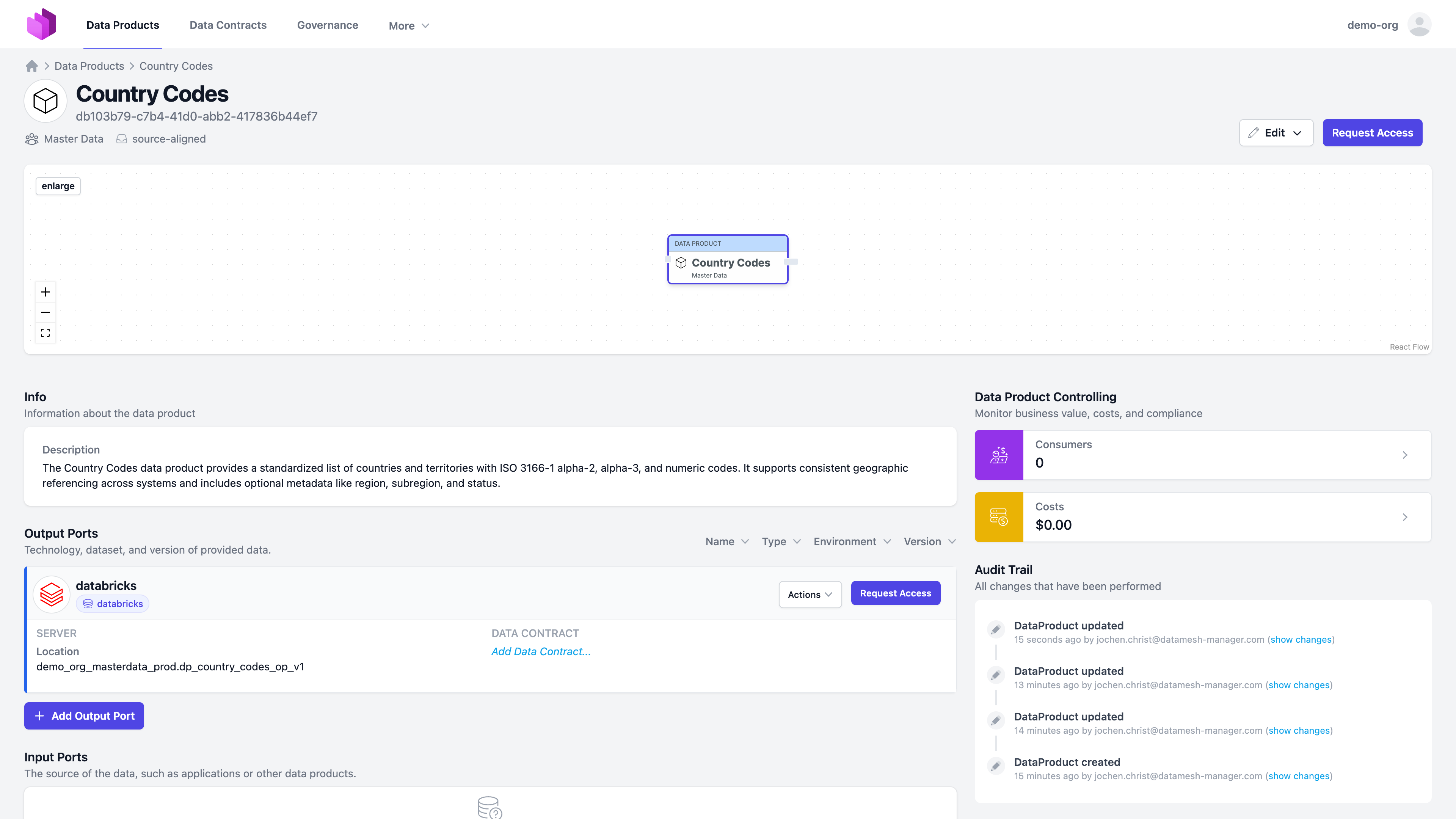

We have now created our first data product:

Create a Data Contract

The next step is to create a data contract.

There are several ways to create a data contract:

- YAML editor

- Entropy Data Web UI

- Data Contract CLI

- Data Contract CLI in a Notebook as Python Library

- Assets Synchronization

- Excel Template

As we already have the table in Databricks, in this tutorial, we use the Data Contract CLI to create a base data contract that we can modify and use.

Installation

Follow the instructions to install Data Contract CLI: https://cli.datacontract.com/#installation

Access Tokens

Create an API key for Entropy Data and an access token for Databricks. Configure these as environment variables in your terminal:

export DATAMESH_MANAGER_API_KEY=dmm_live_5itWG8DrT1EqnmJhmxbp0rMaVcaoE8iHfLPHIY6EAQ8I8oOXbFWgi3aACEBQIjk0

# User -> Settings -> Developer -> Access tokens -> Manage -> Generate new token

export DATACONTRACT_DATABRICKS_TOKEN=dapi71c9aef2292708947xxxxxxxxxx

# Compute -> SQL warehouses -> Warehouse Name -> Connection details

export DATACONTRACT_DATABRICKS_SERVER_HOSTNAME=adb-1973318500000000.17.azuredatabricks.net

# Compute -> SQL warehouses -> Warehouse Name -> Connection details

export DATACONTRACT_DATABRICKS_HTTP_PATH=/sql/1.0/warehouses/4d9d85xxxxxxxx
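Before running the CLI, it can help to confirm that all four variables above are actually set in the current environment. The following is a minimal sketch; the helper name missing_variables is hypothetical and not part of the Data Contract CLI.

```python
import os

# The four variables configured in the step above.
REQUIRED_VARIABLES = [
    "DATAMESH_MANAGER_API_KEY",
    "DATACONTRACT_DATABRICKS_TOKEN",
    "DATACONTRACT_DATABRICKS_SERVER_HOSTNAME",
    "DATACONTRACT_DATABRICKS_HTTP_PATH",
]


def missing_variables(environ=os.environ) -> list[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_variables()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All required environment variables are set.")
```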

Import with Data Contract CLI

datacontract import \
  --format unity \
  --unity-table-full-name demo_org_masterdata_prod.dp_country_codes_op_v1.country_codes \
  --output datacontract.yaml

Modify

We now have a basic data contract with the data model schema.

Let's update it in an editor to give it a proper ID, name, owner, and some more context information. Note that editors such as VS Code or IntelliJ have auto-completion for the Data Contract schema.

dataContractSpecification: 1.1.0
id: country_codes
info:
  title: Country Codes
  version: 0.0.1
  owner: master-data
models:
  country_codes:
    description: ISO codes for every country we work with
    type: table
    title: country_codes
    fields:
      country_name:
        type: string
      iso_alpha2:
        type: string
      iso_alpha3:
        type: string
      iso_numeric:
        type: long

Push to Entropy Data

Now we can upload the Data Contract to Entropy Data.

datacontract publish datacontract.yaml

This is a one-time operation for the initial import. From now on, the data contract is the source of truth for the data model and metadata.

Later, we will configure a Git synchronization to automatically push the data contract to Entropy Data and fetch changes back.

Assign to Output Port

Navigate to the data product in Entropy Data, choose "Edit Output Port", and select the data contract we just created:

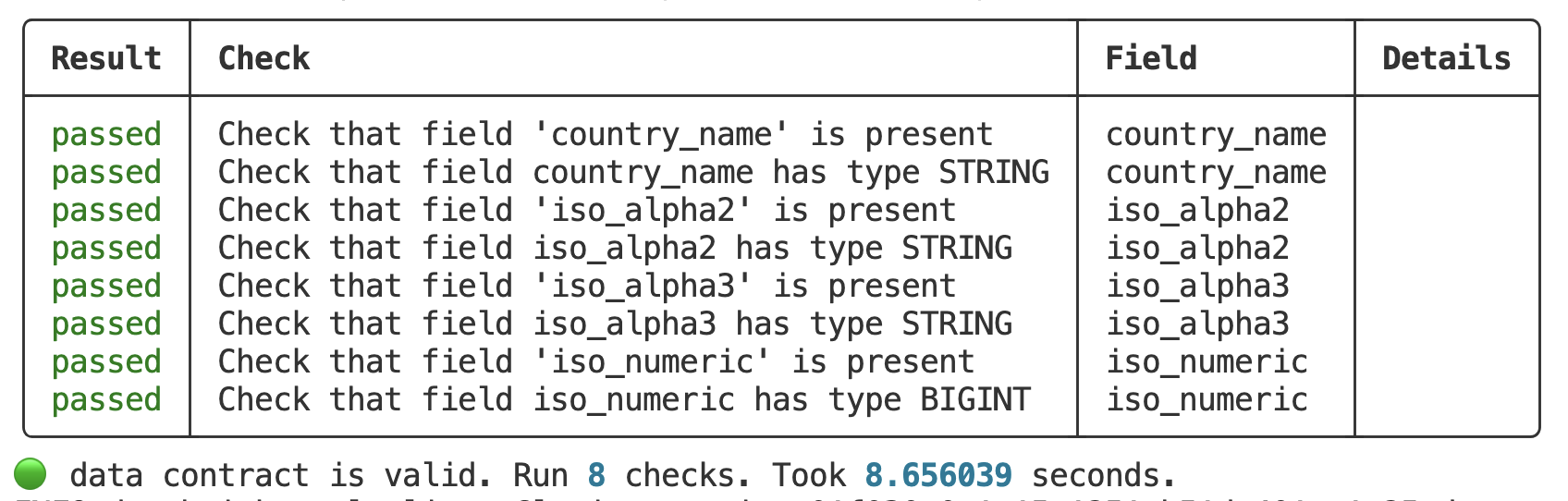

Test and Enforce Data Contract (CLI)

Now, we can test and enforce that the data product is compliant with the data contract specification.

datacontract test https://app.datamesh-manager.com/demo-org/datacontracts/country_codes \
  --publish https://api.entropy-data.com/api/test-results

We can now see in the console and in the Entropy Data Web UI that all tests are passing.

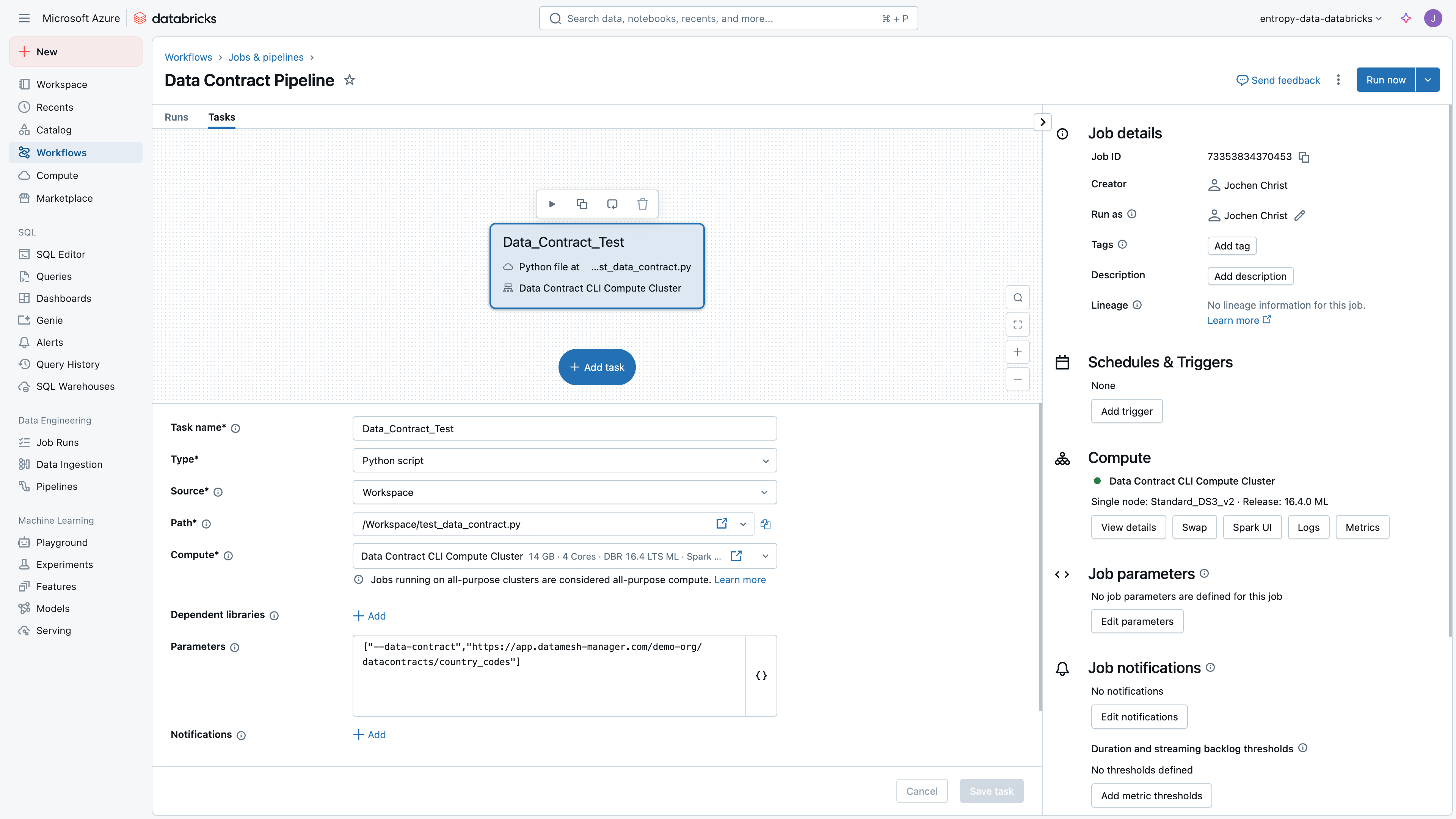
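To give a rough idea of what a schema check in such a test involves, the sketch below compares the fields declared in the data contract with the columns found in a table. It is a conceptual illustration only, not the implementation used by the Data Contract CLI; the function name is hypothetical, and type names are assumed to be already normalized to the contract's vocabulary.

```python
def schema_violations(expected: dict[str, str], actual: dict[str, str]) -> list[str]:
    """Compare contract fields (name -> type) with actual table columns."""
    violations = []
    for name, expected_type in expected.items():
        if name not in actual:
            violations.append(f"missing column: {name}")
        elif actual[name] != expected_type:
            violations.append(
                f"type mismatch for {name}: "
                f"expected {expected_type}, found {actual[name]}"
            )
    return violations


# Fields as declared in the country_codes data contract.
contract_fields = {
    "country_name": "string",
    "iso_alpha2": "string",
    "iso_alpha3": "string",
    "iso_numeric": "long",
}

# A hypothetical table state in which iso_numeric was stored as a string.
table_columns = dict(contract_fields, iso_numeric="string")

for violation in schema_violations(contract_fields, table_columns):
    print(violation)
```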

Test and Enforce Data Contract (Databricks Job)

Of course, we want to check the data contract whenever the data product is updated. For this, we can integrate the test as a task in the data product pipeline or run it as a scheduled job.

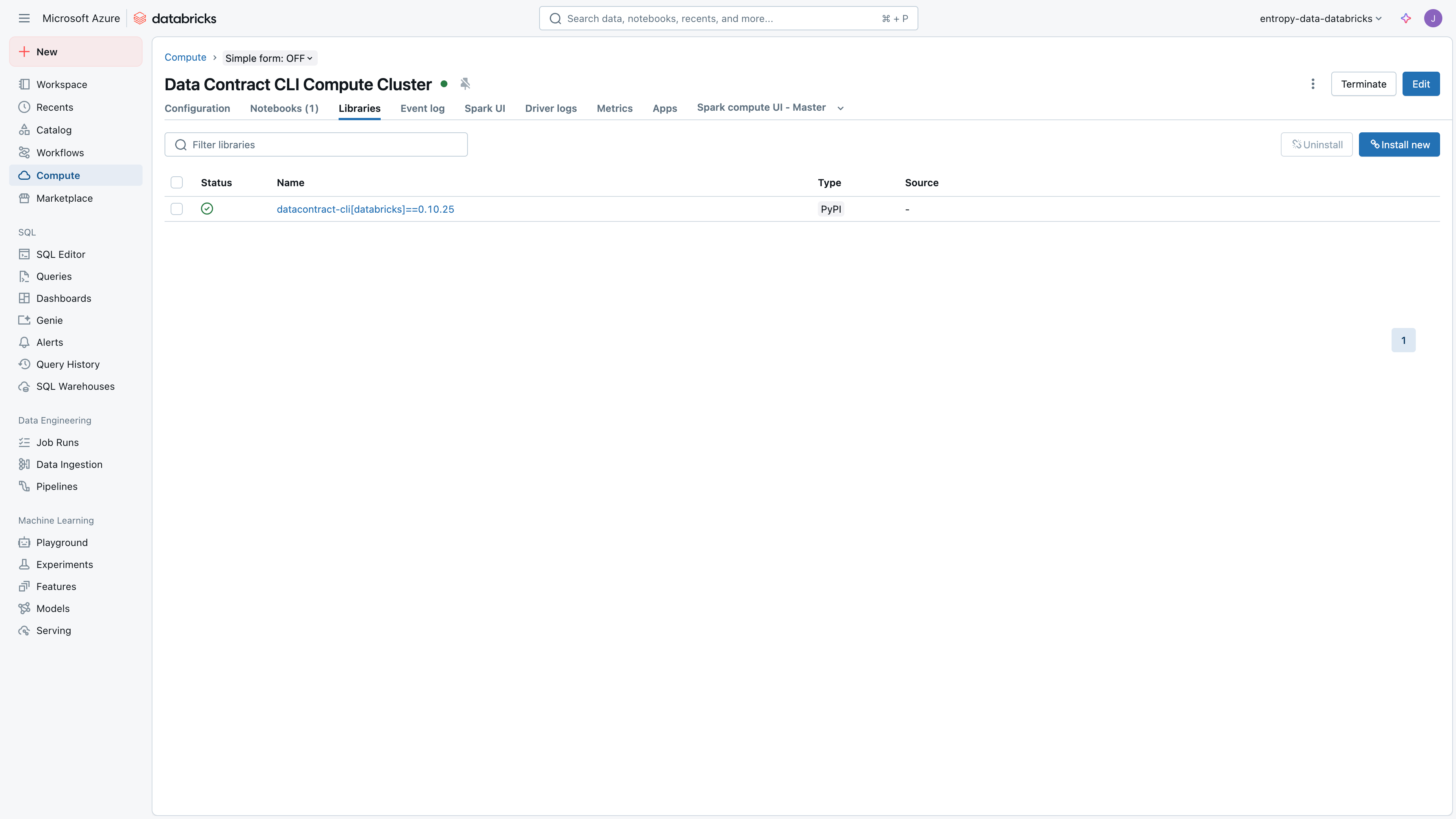

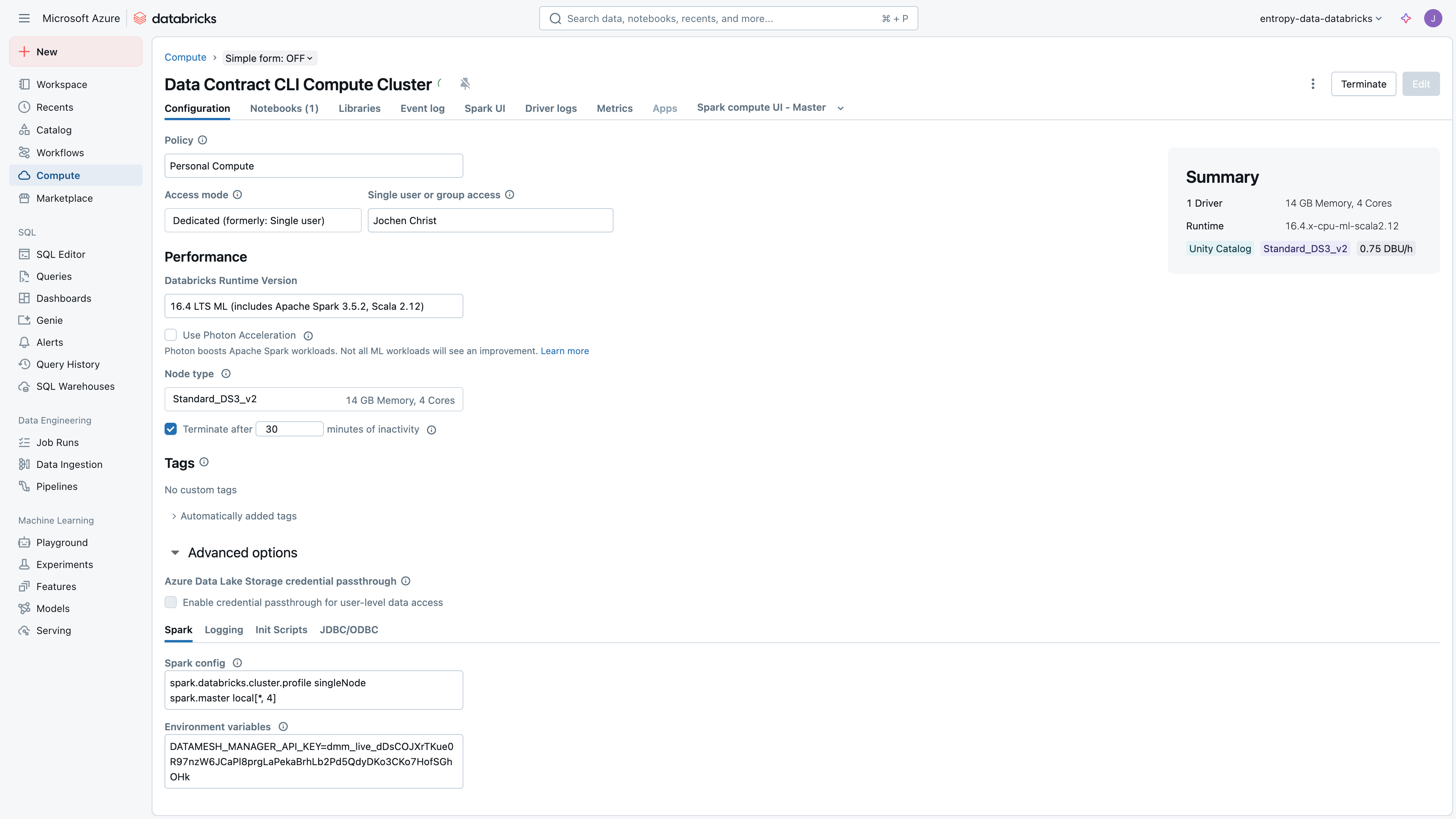

Compute Cluster Configuration

To use the Data Contract CLI efficiently, we recommend creating a compute resource in Databricks that has the Data Contract CLI Python library installed.

Also, create an API Key and set the environment variable DATAMESH_MANAGER_API_KEY to the API key value in the compute environment variable configuration.

Python Script

We can use this Python script to run the tests with Databricks' Spark engine. Save this script as test_data_contract.py in your Databricks workspace.

import argparse

from datacontract.data_contract import DataContract
from pyspark.sql import SparkSession


def main():
    # Set up argument parser
    parser = argparse.ArgumentParser(description='Validate data quality using a data contract')
    parser.add_argument('--data-contract', required=True,
                        help='URL or path to the data contract YAML')

    # Parse arguments
    args = parser.parse_args()

    # Reuse the Spark session provided by Databricks (or create one if none is active)
    spark = SparkSession.builder.getOrCreate()

    # Initialize data contract
    data_contract = DataContract(
        spark=spark,
        data_contract_file=args.data_contract,
        publish_url="https://api.entropy-data.com/api/test-results"
    )

    # Run validation
    run = data_contract.test()

    # Output results
    if run.has_passed():
        print("Data quality validation succeeded.")
        print(run.pretty())
        return 0
    else:
        print("Data quality validation failed.")
        print(run.pretty())
        # Raising an exception marks the Databricks task as failed
        raise Exception("Data quality validation failed.")


if __name__ == "__main__":
    main()

Pipeline Task

Configuration of the pipeline task:
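As a rough sketch, a task of this kind could also be expressed as a Databricks Jobs API task definition rather than configured in the UI. The helper name, task key, workspace path, and cluster ID below are placeholders chosen for illustration; only the --data-contract parameter and the contract URL are taken from this tutorial.

```python
import json


def data_contract_test_task(python_file: str, data_contract: str,
                            cluster_id: str) -> dict:
    """Build a Jobs API task that runs test_data_contract.py on an existing cluster."""
    return {
        "task_key": "test_data_contract",
        "existing_cluster_id": cluster_id,
        "spark_python_task": {
            "python_file": python_file,
            # Passed to the script's argparse parser
            "parameters": ["--data-contract", data_contract],
        },
    }


task = data_contract_test_task(
    python_file="/Workspace/Shared/test_data_contract.py",  # placeholder path
    data_contract="https://app.datamesh-manager.com/demo-org/datacontracts/country_codes",
    cluster_id="<cluster-id>",  # placeholder, use the compute resource created above
)
print(json.dumps(task, indent=2))
```

Adding this task after the tasks that write the output port tables means a contract violation fails the job run instead of going unnoticed.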

Using the Entropy Data Databricks Connector

To briefly sum up what we have done so far:

- Created a data product in Entropy Data

- Created a data contract with the Data Contract CLI

- Assigned the data contract to the data product

- Tested the data contract with the Data Contract CLI

- Automated quality testing with a Databricks job

Two things are still missing to complete the integration. First, Entropy Data allows you to manage access requests to data products, and we want to automatically create the permissions in Databricks when a data owner accepts an access request in Entropy Data.

Second, we want to link data assets from Unity Catalog with data products.

For those two integrations, we provide the Entropy Data Databricks Connector.

Features:

- Access Management: Listens for AccessActivatedEvent and AccessDeactivatedEvent in Entropy Data and automatically grants or revokes access on Databricks for the data consumer as a self-service.

- Asset Synchronization: Syncs tables and schemas of the Unity Catalog to Entropy Data as Assets.

Deploying the Entropy Data Databricks Connector

The Entropy Data Databricks Connector is an open-source project on GitHub and is also available as a Docker image on Docker Hub. If needed, you can fork the project and customize it to your needs.

To get started, we need to set up Docker to run the connector. Beforehand, you will need to get some secrets and configuration data from Databricks so that the connector can fetch the correct data.

You can find an overview of the configuration parameters in the README of the connector.

- DATAMESHMANAGER_CLIENT_APIKEY: API Key for Entropy Data (see: Authentication)

- DATAMESHMANAGER_CLIENT_HOST: URL of the Entropy Data API (default: https://api.entropy-data.com/api)

- DATAMESHMANAGER_CLIENT_DATABRICKS_WORKSPACE_HOST: URL of the Databricks workspace (e.g. adb-1973318500000000.17.azuredatabricks.net, see Databricks Authentication)

- DATAMESHMANAGER_CLIENT_DATABRICKS_WORKSPACE_CLIENTID: Client ID of the Databricks workspace

- DATAMESHMANAGER_CLIENT_DATABRICKS_WORKSPACE_CLIENTSECRET: Client Secret of the Databricks workspace

- DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_HOST: URL of the Databricks account (e.g. https://accounts.azuredatabricks.net, see Databricks Account Settings)

- DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_CLIENTID: Client ID of the Databricks account

- DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_CLIENTSECRET: Client Secret of the Databricks account

You can use different service principals (hence, different Client IDs and Client Secrets) for the workspace and account authentication. The connector will use the workspace authentication to fetch the data from Databricks and the account authentication to manage authentication.

The service principal for the workspace authentication needs to have the USE CATALOG, USE SCHEMA, and MODIFY permissions.

Once all configuration is in place, you can start the connector with the following command. Adapt it if you run the container in a managed container environment such as Kubernetes, Azure Container Apps, or AWS EKS.

docker run \
  -e DATAMESHMANAGER_CLIENT_APIKEY='dmm_live_5TJYRn6CrsWELldNiffii6oKdYNMuEYWinBoOoxRrvXaLW4y9A5Xck12as9dasw9' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_WORKSPACE_HOST='https://adb-1973318500000000.17.azuredatabricks.net' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_WORKSPACE_CLIENTID='d2e11498-4b63-43cd-9ebe-a00000000000' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_WORKSPACE_CLIENTSECRET='dose79375ba79041c9c5250a190002a71cd9' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_HOST='https://accounts.azuredatabricks.net' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_ACCOUNTID='4675280120000000' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_CLIENTID='d2e11498-4b63-43cd-9ebe-a00000000000' \
  -e DATAMESHMANAGER_CLIENT_DATABRICKS_ACCOUNT_CLIENTSECRET='dose000000000000c9c5250a190002a71cd9' \
  datameshmanager/datamesh-manager-connector-databricks:latest

After a successful start of the connector, you will find the following logs for your Connector Docker container:

2025-05-15T07:42:39.596Z INFO 1 --- [cTaskExecutor-1] d.sdk.DataMeshManagerEventListener : databricks-access-management: Start polling for events

2025-05-15T07:42:39.663Z INFO 1 --- [cTaskExecutor-1] d.sdk.DataMeshManagerEventListener : Fetching events with lastEventId=1f030d95-7eeb-67c4-b68c-7f053555cc14

2025-05-15T07:42:39.726Z INFO 1 --- [ main] d.s.DataMeshManagerConnectorRegistration : Registering integration connector databricks-assets

2025-05-15T07:42:39.748Z INFO 1 --- [cTaskExecutor-1] d.sdk.DataMeshManagerEventListener : Processing event 1f030d95-7f45-6d15-b68c-9fcae55fcd9a of type com.datamesh-manager.events.AccessDeactivatedEvent

2025-05-15T07:42:39.753Z INFO 1 --- [cTaskExecutor-1] d.d.DatabricksAccessManagementHandler : Processing AccessDeactivatedEvent 5sHEK6r9PydbE5CVpSk9BN

2025-05-15T07:42:39.789Z INFO 1 --- [cTaskExecutor-2] d.sdk.DataMeshManagerAssetsSynchronizer : databricks-assets: start syncing assets

The connector will subscribe to the Events API and save the current state (lastEventId) directly in Entropy Data.
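The polling behaviour visible in the logs can be pictured with the following sketch: fetch events after the last processed ID, dispatch each one to a handler by type, and move the cursor forward. This is a simplified illustration, not the connector's code. The function names, the event dictionary shape (id, type), and the in-memory event source are assumptions; the AccessActivatedEvent type string is inferred from the AccessDeactivatedEvent type shown in the logs.

```python
from typing import Callable, Iterable, Optional

ACCESS_ACTIVATED = "com.datamesh-manager.events.AccessActivatedEvent"
ACCESS_DEACTIVATED = "com.datamesh-manager.events.AccessDeactivatedEvent"


def process_new_events(
    fetch_events: Callable[[Optional[str]], Iterable[dict]],
    handlers: dict[str, Callable[[dict], None]],
    last_event_id: Optional[str],
) -> Optional[str]:
    """Process all events after last_event_id and return the new cursor."""
    for event in fetch_events(last_event_id):
        handler = handlers.get(event["type"])
        if handler is not None:
            handler(event)
        # Advance the cursor even for unhandled types so they are not re-fetched
        last_event_id = event["id"]
    return last_event_id


# In-memory stand-in for the Events API, for illustration only.
EVENTS = [
    {"id": "1", "type": ACCESS_ACTIVATED},
    {"id": "2", "type": "some.other.Event"},
    {"id": "3", "type": ACCESS_DEACTIVATED},
]


def fake_fetch(last_event_id: Optional[str]) -> list[dict]:
    ids = [event["id"] for event in EVENTS]
    start = ids.index(last_event_id) + 1 if last_event_id in ids else 0
    return EVENTS[start:]


actions: list[str] = []
handlers = {
    ACCESS_ACTIVATED: lambda event: actions.append(f"grant for event {event['id']}"),
    ACCESS_DEACTIVATED: lambda event: actions.append(f"revoke for event {event['id']}"),
}

cursor = process_new_events(fake_fetch, handlers, last_event_id=None)
print(cursor, actions)
```

Persisting the returned cursor after each run is what allows a restarted process to continue where it left off instead of reprocessing old events.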

Using Access Management

The connector will automatically create the permissions in Databricks for approved Access Requests for a Data Product's Output Port in Entropy Data.

See the documentation for details on how the access management flow works.

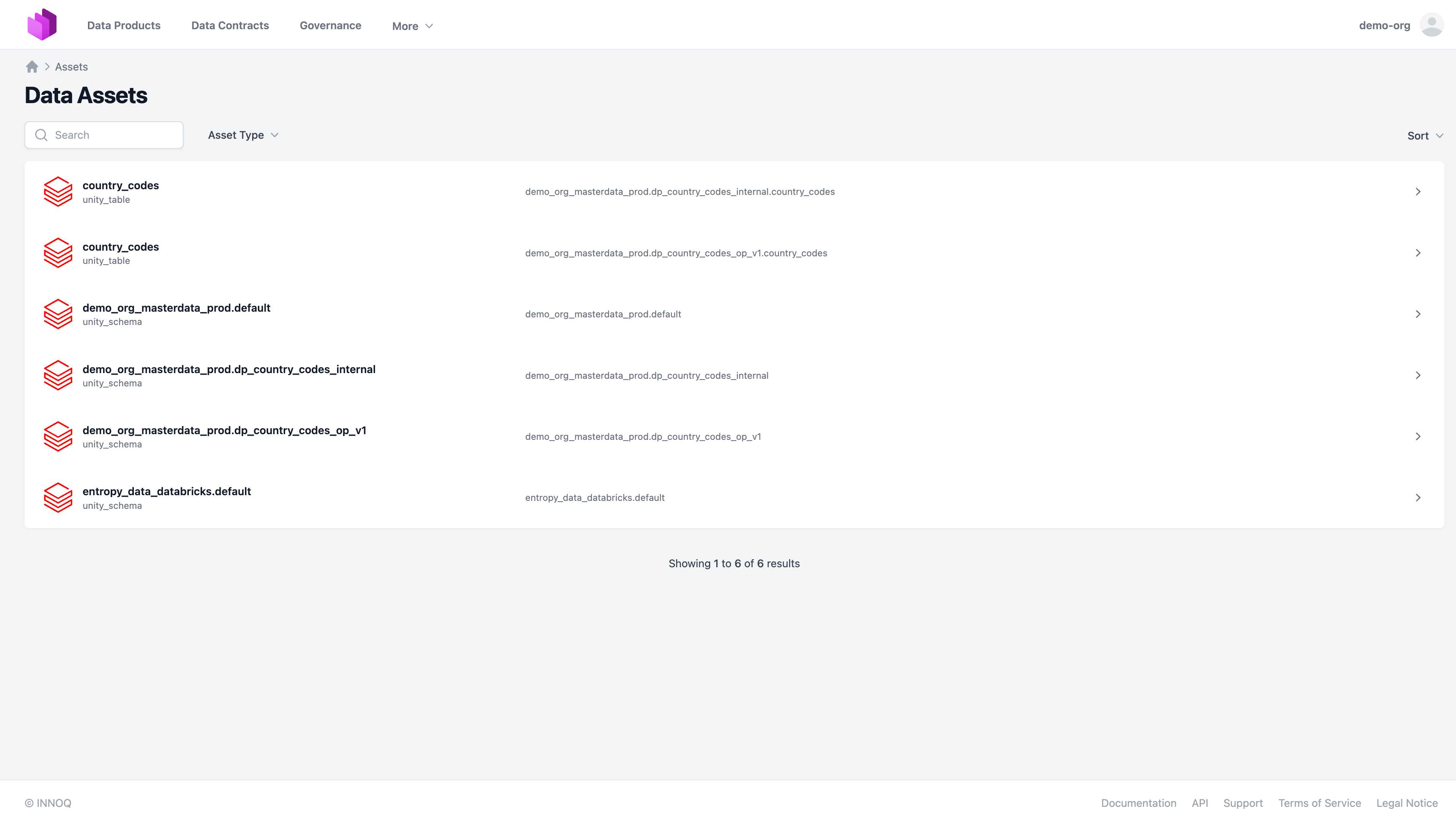

Using Asset Synchronization

Assets are representations of actual data structures in Databricks. You can use them to import models and schemas from Databricks into Entropy Data. The connector will automatically create the assets in Entropy Data. You can link them with data products and derive data contracts from them.

The connector will automatically create assets in Entropy Data for all tables and views in the Databricks Unity Catalog.

For our example environment demo_org_masterdata_prod, the connector will create the following assets:

Recommendation: Databricks Asset Bundle

Databricks Asset Bundles are a great way to build professional data products on Databricks. They include all artefacts, such as code, pipelines, DLT definitions, tests, and metadata, needed to build and deploy data products.

We have another tutorial that shows how to use the Databricks Asset Bundle with Entropy Data. You can find it on: https://www.datamesh-architecture.com/howto/build-a-dataproduct-with-databricks